The times they are AI-changin’

The societal changes that will come with image-generating Artificial Intelligence, this week's cybersecurity highlights, and a selection of infosec resources

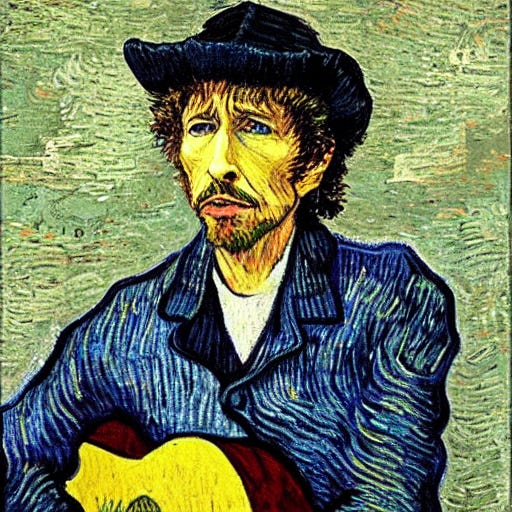

The open-source release of Stable Diffusion got me thinking about the big breakthrough it will be for the Internet and for our society as well. For those of you who are not familiar with the current trend of text-to-image Artificial Intelligence, Stable Diffusion is an open-sourced AI model that can turn whatever we describe with words into an image. It is not the only AI capable of doing so, but it is the first one to be open-sourced. 2022 has been a crazy year for this kind of technology: OpenAI’s Dall-e 2, Google Imagen, Dall-e mini, Google Parti, Meta’s make-a-scene, Microsoft’s NUWA, Midjourney,…

Until now, all of the available AIs have been under the full control of their developers, who could impose any restrictions on the images generated with their technology. For instance, Dall-e 2 cannot create any images depicting famous people, nudity, or violence. However, Stable Diffusion does not have any of these constraints, which makes it easier to create deep-fakes, violent images, or even adult content.

Anyhow, what shocks me is not the lack of restrictions on the images generated by the AI, but the deep changes that are about to start in society. What about the work of artists, designers, and illustrators? Will these AIs become tools that help them in their creative process, or will they become a substitute for their work?

I always thought that creative tasks would be the last ones to be automated by AIs. I had grown used to the automation of repetitive tasks, such as the ones at a factory, and I think I am not the only one thinking this way. The automation of repetitive tasks is viewed positively because those tasks are typically valued less than creative ones. However, this new trend is breaking the paradigm that we were used to.

Image creation is not the only creative task being performed by AIs. Others are also performed incredibly well nowadays, such as code completion with GitHub Copilot or music track separation with LALAL.AI. Let’s not forget automated driving, which in its current state is able to drive more safely than humans (under favorable conditions).

So, there is a clear question to ask ourselves: Are we going to go jobless? Well, I am pretty sure that the massive adoption of these technologies will destroy jobs, as has always happened when society adopts a revolutionary technology. However, new jobs will be created, jobs we were not able to imagine years ago. Take the example of any image generation AI: right now, these AIs respond to a textual input, but the results need to be checked by a human to make sure that they look realistic and that they match the description we wanted to turn into an image. Imagine now that these AIs accepted finer adjustments to their input, such as more complicated parameters and not only words. Most likely this task would be carried out by someone who understands the underlying technology: what is known as a prompt engineer. I never imagined that such a job could ever exist.

Let’s go one step further and imagine the next AI, capable of creating not only images but whole movies from nothing more than a text script. Even more, let’s add an AI that would compose, on its own, the soundtrack of the movie that the first AI created. Isn’t it surprising and scary at the same time?

We are about to live through a moment of breakthrough change, just like when the Internet and the Web became popular, or when social networks took off.

🔒 Highlights on cybersecurity this week

Inappbrowser.com is a tool that lets you check what JavaScript commands get injected through an in-app browser. Felix Krause, the creator of the tool, has performed some testing on the in-app browser of various iOS applications with worrying results.

Apps like Instagram, Facebook or FB Messenger have the ability to modify the page that is being shown in the in-app browser. However, the most intrusive one is TikTok: when opening a website from within the TikTok iOS app, they inject code that can observe every keyboard input. Therefore, they could be collecting critically sensitive information such as passwords, credit card information, etc.

This Twitter thread from the creator gives more insight on his findings:

A whistleblower on Twitter: Peiter Zatko, a.k.a. Mudge, former head of security at Twitter, was fired on January 21 by Parag Agrawal in one of his first official acts as Twitter CEO. Recently, Mudge filed an 84-page complaint with the Securities and Exchange Commission, the Department of Justice and the Federal Trade Commission.

Some people see this complaint as a huge advantage for Elon Musk in his trial against Twitter over the cancelled acquisition.

Mudge states in the complaint that security practice at Twitter was a disaster. I am sure we are going to have some really juicy headlines when the Musk vs. Twitter trial starts in a couple of months.

The OpenSSL 3.0 FIPS Provider has had its FIPS 140-2 validation certificate issued by NIST & CSE.

Dirtycred is a newly disclosed privilege escalation technique for the Linux kernel, presented at Black Hat USA 2022. It is often compared with Dirty Pipe (CVE-2022-0847), the vulnerability that can overwrite any file with read permission on Linux. Note that this CVE identifies Dirty Pipe, not Dirtycred, which is an exploitation method rather than a single bug. The technique is data-only, and there are currently no mitigations in the upstream kernel. Exploits based on Dirtycred could work across different kernels without their code having to be rewritten, making the exploits universal. According to the researchers who discovered it, this method could take advantage of existing vulnerabilities with double-free ability to perform privilege escalation and even container escapes.

There is a public GitHub repository, https://github.com/Markakd/DirtyCred, in which they have published a proof of concept of the technique that takes advantage of two already existing vulnerabilities, CVE-2021-4154 and CVE-2022-2588. The repository also contains the code of a potential fix for the Linux kernel that would prevent exploitation with this technique.

An encrypted zip file can have two valid passwords. TL;DR: Zip files with password protection use PBKDF2 to generate their encryption key for ciphering the contents. However, if the password is longer than 64 bytes, the SHA-1 of that password is used as the input for the PBKDF2 function instead. Therefore, for a file protected with a sufficiently long password, both the original long password and the SHA-1 digest of that password, read as a string of bytes, are valid passwords for that file.
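To see why this happens, here is a minimal sketch of the key derivation step using Python's standard `hashlib`. It assumes PBKDF2 with HMAC-SHA1, whose block size is 64 bytes. HMAC replaces any key longer than its block size with the hash of that key. The salt, iteration count, key length and password below are made-up values for illustration, not taken from a real archive, and `derive_key` is a hypothetical helper name.

```python
import hashlib

# Assumptions for illustration only: these values are made up and are
# not read from a real zip archive.
SALT = bytes(16)
ITERATIONS = 1000
KEY_LENGTH = 32


def derive_key(password: bytes) -> bytes:
    """Derive a key from a password with PBKDF2-HMAC-SHA1."""
    return hashlib.pbkdf2_hmac("sha1", password, SALT, ITERATIONS, dklen=KEY_LENGTH)


# A hypothetical password longer than 64 bytes (the SHA-1 block size).
long_password = b"correct horse battery staple " * 3

# HMAC replaces an over-long key with its SHA-1 digest, so the raw
# 20-byte digest behaves as a second password.
short_password = hashlib.sha1(long_password).digest()

assert len(long_password) > 64
assert derive_key(long_password) == derive_key(short_password)
```

Both passwords produce the same key, so they decrypt the same data. In practice, the second password is only usable if the digest bytes happen to be characters that can be typed into the password prompt.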

♥ My favourite things

Dungeon Master - Clever Floppy Disk Anti-Piracy: a video from Modern Vintage Gamer in which he explains in astonishing detail how a clever anti-copy mechanism was implemented on floppy disks.

Infosec online resources

What is Lattice-based cryptography? is a nice website that compiles different resources to learn more about this family of quantum-resistant algorithms. Most of the resources, however, require some degree of math knowledge. If you are interested in lattice-based cryptography and, like me, find these materials hard to understand, you can start with Introduction to Lattice Based Cryptography.

Twitter thread about penetration testing labs:

Hijack Libs is a project that provides a curated list of DLL Hijacking candidates. A mapping between DLLs and vulnerable executables is kept and can be searched via its website.